Over the last few weekends, Horde 6 code has been merged back from the Maintaina fork and from separate contributions to the former Horde development version, “master”. It was time to upgrade the development tool chain.

Back in the Horde 5 days, there was a utility called git-tools, developed by Michael Rubinsky. It would check out all Horde library and application repositories of the GitHub organization into a development directory, also called the git tree, and link them into another structure that resembled a regular Horde 5 installation, called the web tree. This required hardcoding some configuration that could otherwise be auto-detected, but it allowed organizing the Horde code base in a developer-friendly flat structure and provided a ready-to-run test scenario that reflected the latest code changes even before they were committed to the official repositories. In the days of composer, half of what git-tools does is already covered by composer itself. The git-tools shell already had an integration for the horde-components tool, which manages releases, change logs, housekeeping tasks and some more.

It was obvious that I wanted similar functionality for composer-based setups. It took a while to get this right. The good news is that I got there. Bear with me for another paragraph of incremental learning, or skip to the next section for the results.

It’s getting better all the time

Originally I integrated a generator for “vcs” type repositories into the composer.json generation code. But these vcs repositories are relatively slow. This is OK for one or two apps or leaf packages, but it is painfully slow when you have a list of 80 or 120 dependencies, each pulled from a separate vcs repository.
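To make the “vcs” approach concrete, here is a minimal sketch of what such a generator could emit. It is not the actual horde-components code: the function names, the chosen packages and the GitHub URLs are assumptions for illustration. Only the `{"type": "vcs", "url": ...}` entry format is Composer's own.

```python
import json


def vcs_repositories(org_url, repo_names):
    """Return one Composer "vcs" repository entry per git repository."""
    return [{"type": "vcs", "url": f"{org_url}/{name}"} for name in repo_names]


def composer_manifest(requirements, repositories):
    """Assemble a minimal composer.json structure as a Python dict."""
    return {
        "require": dict(requirements),
        "repositories": list(repositories),
        # Development branches are only resolvable with a relaxed stability.
        "minimum-stability": "dev",
    }


# Hypothetical input: three libraries from the developer tree.
repos = vcs_repositories("https://github.com/horde", ["Util", "Exception", "Core"])
manifest = composer_manifest({"horde/core": "dev-FRAMEWORK_6_0"}, repos)
print(json.dumps(manifest, indent=4))
```

Composer has to inspect each of these repositories individually to discover the available versions, which is why the cost grows with the number of entries.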

The next iteration involved generating a satis repository as a stand-in for releasing code to packagist. The satis repository would be updated by any commit or accepted pull request in any of the serviced repositories. Generating the satis repository is not particularly fast, but I managed to scope refreshes to the individual component that needed updating. Reading from satis, however, is way faster than the vcs approach. Keeping an installation up to date with the latest development commits became much more viable. I also figured out that I could replace an already installed dependency with an actual git repository and composer would update the autoloader as needed. This works as long as you don’t change the autoloader rules, for example by upgrading a package from PSR-0 to PSR-4.
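The scoped refresh can be pictured with the following sketch. Satis accepts an optional list of package names after the output directory, so that only those packages are rebuilt. Everything else here is an assumption: the helper names, the repository name and homepage, the file locations, and how the build is triggered by a commit.

```python
import json
import shlex


def satis_config(name, homepage, repo_urls):
    """Build a minimal satis.json structure covering the given git repositories."""
    return {
        "name": name,
        "homepage": homepage,
        "repositories": [{"type": "vcs", "url": url} for url in repo_urls],
        "require-all": True,
    }


def satis_build_command(config_file, output_dir, packages=()):
    """Return the argument list for a full or package-scoped satis build."""
    return ["php", "bin/satis", "build", config_file, output_dir, *packages]


# Hypothetical values for illustration only.
config = satis_config(
    "example/horde-dev",
    "https://satis.example.org",
    ["https://github.com/horde/Util", "https://github.com/horde/Core"],
)
full_build = satis_build_command("satis.json", "web/")
scoped_build = satis_build_command("satis.json", "web/", ["horde/util"])

print(json.dumps(config, indent=4))
print(shlex.join(scoped_build))
```

A hook that reacts to a push would only need to map the changed repository to its package name and run the scoped variant.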

Back to #1

Checking out individual libraries as root components and doing integration tests in the satis setup worked OK when the focus was on isolating individual pain points and solving bugs. For any development spanning changes on multiple libraries, it did not work out too well. I ended up implementing a new command.

horde-components github-clone-org

This will check out a developer tree of git repositories containing apps, libraries or themes.

total 752

drwxr-xr-x 188 root root 4096 Jan 12 11:04 ./

drwxr-xr-x 4 root root 4096 Dec 4 17:44 ../

drwxr-xr-x 9 root root 4096 Jan 11 07:50 ActiveSync/

drwxr-xr-x 9 root root 4096 Jan 11 07:50 Alarm/

drwxr-xr-x 8 root root 4096 Jan 11 07:50 ApertureToAnselExportPlugin/

drwxr-xr-x 9 root root 4096 Jan 11 07:50 Argv/

drwxr-xr-x 9 root root 4096 Jan 11 07:50 Auth/

drwxr-xr-x 7 root root 4096 Jan 11 07:50 Autoloader/

drwxr-xr-x 7 root root 4096 Jan 11 07:50 Autoloader_Cache/

drwxr-xr-x 7 root root 4096 Jan 11 07:50 Backup/

drwxr-xr-x 7 root root 4096 Jan 11 07:51 Browser/

drwxr-xr-x 9 root root 4096 Jan 11 07:51 Cache/

drwxr-xr-x 9 root root 4096 Jan 11 07:51 Cli/

drwxr-xr-x 8 root root 4096 Jan 11 07:51 Cli_Application/

drwxr-xr-x 8 root root 4096 Jan 11 07:51 Cli_Modular/

drwxr-xr-x 8 root root 4096 Jan 11 07:52 Compress/

drwxr-xr-x 8 root root 4096 Jan 11 07:52 Compress_Fast/

drwxr-xr-x 8 root root 4096 Jan 11 07:52 Constraint/

drwxr-xr-x 8 root root 4096 Jan 11 07:52 Controller/

drwxr-xr-x 11 root root 4096 Jan 11 07:52 Core/

drwxr-xr-x 8 root root 4096 Jan 11 07:52 Crypt/

drwxr-xr-x 7 root root 4096 Jan 11 07:52 Crypt_Blowfish/

drwxr-xr-x 6 root root 4096 Jan 11 07:52 CssMinify/

drwxr-xr-x 8 root root 4096 Jan 11 07:52 Css_Parser/

...

drwxr-xr-x 7 root root 4096 Jan 11 08:17 Xml_Element/

drwxr-xr-x 7 root root 4096 Jan 11 08:17 Xml_Wbxml/

drwxr-xr-x 7 root root 4096 Jan 11 08:17 Yaml/

drwxr-xr-x 14 root root 4096 Jan 11 07:50 agora/

drwxr-xr-x 17 root root 4096 Jan 11 07:50 ansel/

drwxr-xr-x 21 root root 4096 Jan 11 07:51 base/

drwxr-xr-x 13 root root 4096 Jan 11 07:51 beatnik/

drwxr-xr-x 7 root root 4096 Jan 12 21:33 bundle/

drwxr-xr-x 12 root root 4096 Jan 11 07:51 chora/

drwxr-xr-x 15 root root 4096 Jan 13 16:54 components/

drwxr-xr-x 11 root root 4096 Jan 11 07:52 content/

drwxr-xr-x 2 root root 4096 Jan 11 08:02 dev.horde.org/

drwxr-xr-x 3 root root 4096 Jan 11 08:02 dns/

drwxr-xr-x 15 root root 4096 Jan 11 08:03 folks/

drwxr-xr-x 6 root root 4096 Jan 11 08:03 git-tools/

drwxr-xr-x 12 root root 4096 Jan 11 08:03 gollem/

drwxr-xr-x 9 root root 4096 Jan 11 08:03 groupware/

drwxr-xr-x 13 root root 4096 Jan 11 08:03 hermes/

drwxr-xr-x 6 root root 4096 Jan 11 08:08 horde-installer-plugin/

drwxr-xr-x 7 root root 4096 Jan 11 08:08 horde-support/

drwxr-xr-x 9 root root 4096 Jan 11 08:10 horde-web/

drwxr-xr-x 10 root root 4096 Jan 11 08:10 hylax/

drwxr-xr-x 7 root root 4096 Jan 11 08:12 iPhoto2Ansel/

drwxr-xr-x 14 root root 4096 Jan 11 08:11 imp/

drwxr-xr-x 14 root root 4096 Jan 11 08:12 ingo/

drwxr-xr-x 3 root root 4096 Jan 11 08:12 internal/

drwxr-xr-x 15 root root 4096 Jan 11 08:12 jonah/

drwxr-xr-x 12 root root 4096 Jan 11 08:12 klutz/

drwxr-xr-x 13 root root 4096 Jan 11 08:12 kolab_webmail/

drwxr-xr-x 9 root root 4096 Jan 11 08:12 koward/

drwxr-xr-x 18 root root 4096 Jan 11 08:13 kronolith/

drwxr-xr-x 13 root root 4096 Jan 11 08:13 luxor/

drwxr-xr-x 17 root root 4096 Jan 11 08:13 mnemo/

drwxr-xr-x 18 root root 4096 Jan 11 08:14 nag/

drwxr-xr-x 9 root root 4096 Jan 11 08:14 operator/

drwxr-xr-x 13 root root 4096 Jan 11 08:14 passwd/

drwxr-xr-x 11 root root 4096 Jan 11 08:14 pastie/

drwxr-xr-x 10 root root 4096 Jan 11 08:15 sam/

drwxr-xr-x 13 root root 4096 Jan 11 08:15 sesha/

drwxr-xr-x 11 root root 4096 Jan 11 08:15 shout/

drwxr-xr-x 13 root root 4096 Jan 11 08:15 skeleton/

drwxr-xr-x 8 root root 4096 Jan 11 08:16 timeobjects/

drwxr-xr-x 14 root root 4096 Jan 12 09:08 trean/

drwxr-xr-x 16 root root 4096 Jan 11 08:16 turba/

drwxr-xr-x 11 root root 4096 Jan 11 08:16 ulaform/

drwxr-xr-x 15 root root 4096 Jan 12 11:06 vilma/

drwxr-xr-x 10 root root 4096 Jan 11 08:17 webmail/

drwxr-xr-x 18 root root 4096 Jan 11 08:17 whups/

drwxr-xr-x 15 root root 4096 Jan 11 08:17 wicked/
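For readers who want a feel for what an organization-wide clone involves, the sketch below turns a list of repository records into `git clone` commands. It is not the implementation of `github-clone-org`. It assumes records shaped like the entries the GitHub REST API returns when listing an organization's repositories (`name` and `clone_url` fields). The target directory and branch are example values.

```python
from pathlib import PurePosixPath


def clone_commands(repos, target_dir, branch=None):
    """Return one git clone argument list per repository record."""
    commands = []
    for repo in sorted(repos, key=lambda r: r["name"]):
        command = ["git", "clone"]
        if branch:
            # Check out a specific branch instead of the remote default.
            command += ["--branch", branch]
        destination = PurePosixPath(target_dir) / repo["name"]
        command += [repo["clone_url"], str(destination)]
        commands.append(command)
    return commands


# Hypothetical records; a real run would fetch them from the GitHub API.
sample_repos = [
    {"name": "components", "clone_url": "https://github.com/horde/components.git"},
    {"name": "Util", "clone_url": "https://github.com/horde/Util.git"},
    {"name": "base", "clone_url": "https://github.com/horde/base.git"},
]
commands = clone_commands(sample_repos, "/srv/git/horde", branch="FRAMEWORK_6_0")
for command in commands:
    print(" ".join(command))
```

Each command could then be handed to `subprocess.run`, skipping directories that already exist.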

This tree does not look like a composer-based installation and would not easily work in a web browser. I did not want to reinvent composer with its autoloader and other benefits, so I wrapped it into an installer. This installer creates a new copy of the horde/bundle base project and registers all the other libraries through a special type of composer repository, “path”.
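The registration step can be approximated as follows. This is a sketch rather than the installer's real code: it assumes that every checkout worth registering has a `composer.json` in its top-level directory, and the function name and demo directory names are made up. The `path` repository type and its `symlink` option are standard Composer features.

```python
import json
import tempfile
from pathlib import Path


def path_repositories(git_tree, symlink=True):
    """Return a Composer "path" repository entry for each checkout with a composer.json."""
    entries = []
    for manifest in sorted(Path(git_tree).glob("*/composer.json")):
        entries.append(
            {
                "type": "path",
                "url": str(manifest.parent),
                "options": {"symlink": symlink},
            }
        )
    return entries


# Demo on a throwaway tree: two packages and one directory without a manifest.
with tempfile.TemporaryDirectory() as tree:
    for name in ("Util", "Exception", "dev.horde.org"):
        (Path(tree) / name).mkdir()
    for name in ("Util", "Exception"):
        package = {"name": f"horde/{name.lower()}"}
        (Path(tree) / name / "composer.json").write_text(json.dumps(package))
    repositories = path_repositories(tree)
    print(json.dumps(repositories, indent=4))
```

Composer also accepts wildcards in the `url` of a path repository, so a single entry pointing at the whole tree is another way to get a similar result.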

/srv/git/horde/components/bin/horde-components -c ~/horde-testme.conf.php install

[ INFO ] Installation directory is missing: /srv/www/testme

[ OK ] Created installation directory: /srv/www/testme

Creating a "horde/bundle" project at "../../www/testme"

Installing horde/bundle (dev-FRAMEWORK_6_0)

- Installing horde/bundle (dev-FRAMEWORK_6_0): Mirroring from /srv/git/horde/bundle

Created project in /srv/www/testme

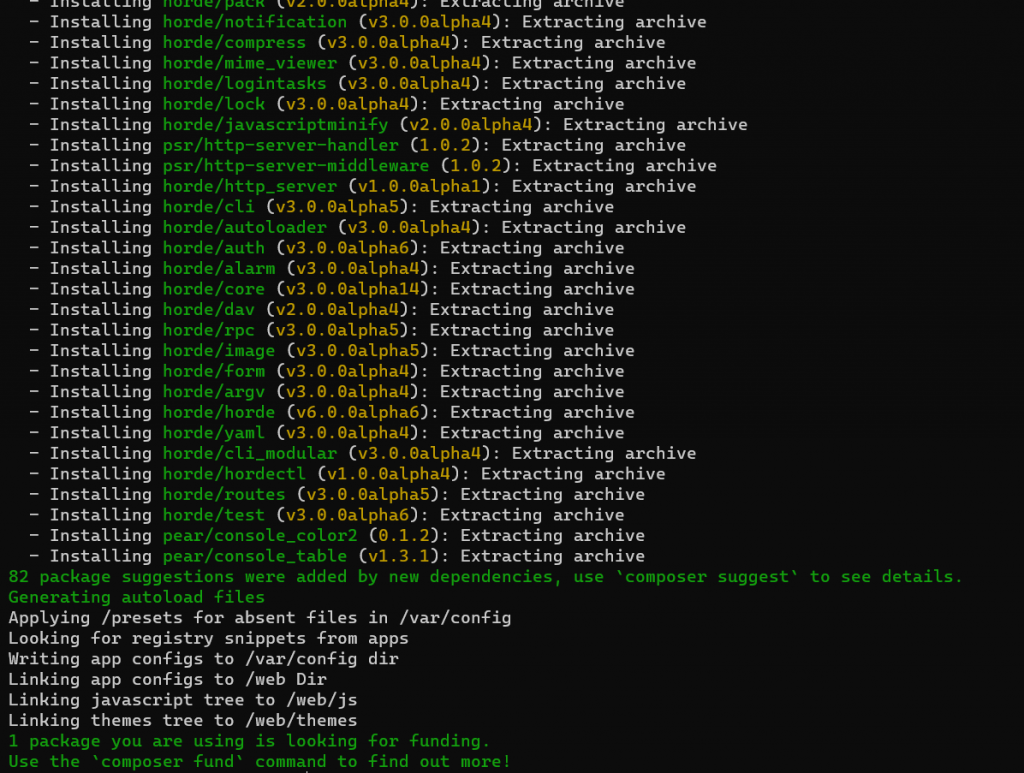

When running the composer install command on this prepared setup, composer will not download the horde components from packagist or github but will use your local checkout. Only external dependencies are still downloaded from the web.

# composer install

No composer.lock file present. Updating dependencies to latest instead of installing from lock file. See https://getcomposer.org/install for more information.

Loading composer repositories with package information

Updating dependencies

Lock file operations: 92 installs, 0 updates, 0 removals

- Locking horde/alarm (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/argv (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/auth (dev-FRAMEWORK_6_0 as 3.0.0alpha7)

- Locking horde/autoloader (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/browser (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/cache (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/cli (dev-FRAMEWORK_6_0 as 3.0.0alpha6)

- Locking horde/cli_modular (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/compress (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/compress_fast (dev-FRAMEWORK_6_0 as 2.0.0alpha5)

- Locking horde/constraint (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/controller (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/core (dev-FRAMEWORK_6_0 as 3.0.0alpha17)

- Locking horde/crypt_blowfish (dev-FRAMEWORK_6_0 as 2.0.0alpha4)

- Locking horde/css_parser (dev-FRAMEWORK_6_0 as 2.0.0alpha5)

- Locking horde/cssminify (dev-FRAMEWORK_6_0 as 2.0.0alpha5)

- Locking horde/data (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/date (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/dav (dev-FRAMEWORK_6_0 as 2.0.0alpha5)

- Locking horde/db (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/exception (dev-FRAMEWORK_6_0 as 3.0.0alpha4)

- Locking horde/form (dev-FRAMEWORK_6_0 as 3.0.0alpha6)

- Locking horde/group (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/hashtable (dev-FRAMEWORK_6_0 as 2.0.0alpha5)

- Locking horde/history (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/horde (dev-FRAMEWORK_6_0 as 6.0.0alpha7)

- Locking horde/horde-installer-plugin (v2.5.5)

- Locking horde/hordectl (v1.0.0alpha4)

- Locking horde/http (dev-FRAMEWORK_6_0 as 3.0.0alpha8)

- Locking horde/http_server (dev-FRAMEWORK_6_0 as 1.0.0alpha2)

- Locking horde/icalendar (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/idna (dev-FRAMEWORK_6_0 as 2.0.0alpha5)

- Locking horde/image (dev-FRAMEWORK_6_0 as 3.0.0alpha6)

- Locking horde/injector (dev-FRAMEWORK_6_0 as 3.0.0alpha11)

- Locking horde/javascriptminify (dev-FRAMEWORK_6_0 as 2.0.0alpha5)

- Locking horde/listheaders (dev-FRAMEWORK_6_0 as 2.0.0alpha5)

- Locking horde/lock (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/log (dev-FRAMEWORK_6_0 as 3.0.0alpha9)

- Locking horde/logintasks (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/mail (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/mime (dev-FRAMEWORK_6_0 as 3.0.0alpha6)

- Locking horde/mime_viewer (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/nls (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/notification (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/pack (dev-FRAMEWORK_6_0 as 2.0.0alpha5)

- Locking horde/perms (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/prefs (dev-FRAMEWORK_6_0 as 3.0.0alpha7)

- Locking horde/routes (dev-FRAMEWORK_6_0 as 3.0.0alpha6)

- Locking horde/rpc (dev-FRAMEWORK_6_0 as 3.0.0alpha6)

- Locking horde/secret (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/serialize (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/sessionhandler (dev-FRAMEWORK_6_0 as 3.0.0alpha3)

- Locking horde/share (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/stream (dev-FRAMEWORK_6_0 as 2.0.0alpha5)

- Locking horde/stream_filter (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/stream_wrapper (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/support (dev-FRAMEWORK_6_0 as 3.0.0alpha6)

- Locking horde/template (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/test (dev-FRAMEWORK_6_0 as 3.0.0alpha7)

- Locking horde/text_diff (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/text_filter (dev-FRAMEWORK_6_0 as 3.0.0alpha4)

- Locking horde/text_flowed (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/token (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/translation (dev-FRAMEWORK_6_0 as 3.0.0alpha3)

- Locking horde/tree (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/url (dev-FRAMEWORK_6_0 as 3.0.0alpha6)

- Locking horde/util (dev-FRAMEWORK_6_0 as 3.0.0alpha8)

- Locking horde/vfs (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/view (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/xml_element (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking horde/yaml (dev-FRAMEWORK_6_0 as 3.0.0alpha5)

- Locking pear/archive_tar (1.4.14)

- Locking pear/console_color2 (0.1.2)

- Locking pear/console_getopt (v1.4.3)

- Locking pear/console_table (v1.3.1)

- Locking pear/pear (v1.10.14)

- Locking pear/structures_graph (v1.1.1)

- Locking pear/xml_util (v1.4.5)

- Locking php-extended/polyfill-php80-stringable (1.2.9)

- Locking psr/container (2.0.2)

- Locking psr/http-client (1.0.3)

- Locking psr/http-factory (1.0.2)

- Locking psr/http-message (2.0)

- Locking psr/http-server-handler (1.0.2)

- Locking psr/http-server-middleware (1.0.2)

- Locking psr/log (3.0.0)

- Locking sabre/dav (4.6.0)

- Locking sabre/event (5.1.4)

- Locking sabre/http (5.1.10)

- Locking sabre/uri (2.3.3)

- Locking sabre/vobject (4.5.4)

- Locking sabre/xml (2.2.6)

Writing lock file

Installing dependencies from lock file (including require-dev)

Package operations: 92 installs, 0 updates, 0 removals

- Installing horde/horde-installer-plugin (v2.5.5): Extracting archive

- Installing horde/util (dev-FRAMEWORK_6_0 as 3.0.0alpha8): Symlinking from /srv/git/horde/Util

- Installing horde/translation (dev-FRAMEWORK_6_0 as 3.0.0alpha3): Symlinking from /srv/git/horde/Translation

- Installing horde/exception (dev-FRAMEWORK_6_0 as 3.0.0alpha4): Symlinking from /srv/git/horde/Exception

- Installing horde/compress_fast (dev-FRAMEWORK_6_0 as 2.0.0alpha5): Symlinking from /srv/git/horde/Compress_Fast

- Installing horde/cache (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Cache

- Installing horde/stream_wrapper (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Stream_Wrapper

- Installing horde/support (dev-FRAMEWORK_6_0 as 3.0.0alpha6): Symlinking from /srv/git/horde/Support

- Installing psr/log (3.0.0): Extracting archive

- Installing php-extended/polyfill-php80-stringable (1.2.9): Extracting archive

- Installing horde/constraint (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Constraint

- Installing horde/log (dev-FRAMEWORK_6_0 as 3.0.0alpha9): Symlinking from /srv/git/horde/Log

- Installing psr/container (2.0.2): Extracting archive

- Installing horde/injector (dev-FRAMEWORK_6_0 as 3.0.0alpha11): Symlinking from /srv/git/horde/Injector

- Installing horde/controller (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Controller

- Installing horde/crypt_blowfish (dev-FRAMEWORK_6_0 as 2.0.0alpha4): Symlinking from /srv/git/horde/Crypt_Blowfish

- Installing horde/url (dev-FRAMEWORK_6_0 as 3.0.0alpha6): Symlinking from /srv/git/horde/Url

- Installing horde/css_parser (dev-FRAMEWORK_6_0 as 2.0.0alpha5): Symlinking from /srv/git/horde/Css_Parser

- Installing horde/cssminify (dev-FRAMEWORK_6_0 as 2.0.0alpha5): Symlinking from /srv/git/horde/CssMinify

- Installing horde/text_flowed (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Text_Flowed

- Installing horde/secret (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Secret

- Installing horde/idna (dev-FRAMEWORK_6_0 as 2.0.0alpha5): Symlinking from /srv/git/horde/Idna

- Installing horde/text_filter (dev-FRAMEWORK_6_0 as 3.0.0alpha4): Symlinking from /srv/git/horde/Text_Filter

- Installing horde/stream_filter (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Stream_Filter

- Installing horde/stream (dev-FRAMEWORK_6_0 as 2.0.0alpha5): Symlinking from /srv/git/horde/Stream

- Installing horde/mime (dev-FRAMEWORK_6_0 as 3.0.0alpha6): Symlinking from /srv/git/horde/Mime

- Installing horde/mail (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Mail

- Installing horde/listheaders (dev-FRAMEWORK_6_0 as 2.0.0alpha5): Symlinking from /srv/git/horde/ListHeaders

- Installing horde/nls (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Nls

- Installing horde/date (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Date

- Installing horde/icalendar (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Icalendar

- Installing horde/browser (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Browser

- Installing horde/data (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Data

- Installing horde/hashtable (dev-FRAMEWORK_6_0 as 2.0.0alpha5): Symlinking from /srv/git/horde/HashTable

- Installing horde/db (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Db

- Installing horde/history (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/History

- Installing horde/view (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/View

- Installing horde/vfs (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Vfs

- Installing horde/tree (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Tree

- Installing horde/token (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Token

- Installing horde/text_diff (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Text_Diff

- Installing horde/serialize (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Serialize

- Installing horde/xml_element (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Xml_Element

- Installing horde/group (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Group

- Installing horde/perms (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Perms

- Installing sabre/uri (2.3.3): Extracting archive

- Installing sabre/xml (2.2.6): Extracting archive

- Installing sabre/vobject (4.5.4): Extracting archive

- Installing sabre/event (5.1.4): Extracting archive

- Installing sabre/http (5.1.10): Extracting archive

- Installing sabre/dav (4.6.0): Extracting archive

- Installing psr/http-message (2.0): Extracting archive

- Installing psr/http-factory (1.0.2): Extracting archive

- Installing psr/http-client (1.0.3): Extracting archive

- Installing horde/http (dev-FRAMEWORK_6_0 as 3.0.0alpha8): Symlinking from /srv/git/horde/Http

- Installing pear/pear (v1.10.14): Extracting archive

- Installing pear/xml_util (v1.4.5): Extracting archive

- Installing pear/structures_graph (v1.1.1): Extracting archive

- Installing pear/console_getopt (v1.4.3): Extracting archive

- Installing pear/archive_tar (1.4.14): Extracting archive

- Installing horde/template (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Template

- Installing horde/share (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Share

- Installing horde/sessionhandler (dev-FRAMEWORK_6_0 as 3.0.0alpha3): Symlinking from /srv/git/horde/SessionHandler

- Installing horde/prefs (dev-FRAMEWORK_6_0 as 3.0.0alpha7): Symlinking from /srv/git/horde/Prefs

- Installing horde/pack (dev-FRAMEWORK_6_0 as 2.0.0alpha5): Symlinking from /srv/git/horde/Pack

- Installing horde/notification (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Notification

- Installing horde/compress (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Compress

- Installing horde/mime_viewer (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Mime_Viewer

- Installing horde/logintasks (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/LoginTasks

- Installing horde/lock (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Lock

- Installing horde/javascriptminify (dev-FRAMEWORK_6_0 as 2.0.0alpha5): Symlinking from /srv/git/horde/JavascriptMinify

- Installing psr/http-server-handler (1.0.2): Extracting archive

- Installing psr/http-server-middleware (1.0.2): Extracting archive

- Installing horde/http_server (dev-FRAMEWORK_6_0 as 1.0.0alpha2): Symlinking from /srv/git/horde/Http_Server

- Installing horde/cli (dev-FRAMEWORK_6_0 as 3.0.0alpha6): Symlinking from /srv/git/horde/Cli

- Installing horde/autoloader (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Autoloader

- Installing horde/auth (dev-FRAMEWORK_6_0 as 3.0.0alpha7): Symlinking from /srv/git/horde/Auth

- Installing horde/alarm (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Alarm

- Installing horde/core (dev-FRAMEWORK_6_0 as 3.0.0alpha17): Symlinking from /srv/git/horde/Core

- Installing horde/dav (dev-FRAMEWORK_6_0 as 2.0.0alpha5): Symlinking from /srv/git/horde/Dav

- Installing horde/rpc (dev-FRAMEWORK_6_0 as 3.0.0alpha6): Symlinking from /srv/git/horde/Rpc

- Installing horde/image (dev-FRAMEWORK_6_0 as 3.0.0alpha6): Symlinking from /srv/git/horde/Image

- Installing horde/form (dev-FRAMEWORK_6_0 as 3.0.0alpha6): Symlinking from /srv/git/horde/Form

- Installing horde/argv (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Argv

- Installing horde/horde (dev-FRAMEWORK_6_0 as 6.0.0alpha7): Symlinking from /srv/git/horde/base

- Installing horde/yaml (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Yaml

- Installing horde/cli_modular (dev-FRAMEWORK_6_0 as 3.0.0alpha5): Symlinking from /srv/git/horde/Cli_Modular

- Installing horde/hordectl (v1.0.0alpha4): Extracting archive

- Installing horde/routes (dev-FRAMEWORK_6_0 as 3.0.0alpha6): Symlinking from /srv/git/horde/Routes

- Installing horde/test (dev-FRAMEWORK_6_0 as 3.0.0alpha7): Symlinking from /srv/git/horde/Test

- Installing pear/console_color2 (0.1.2): Extracting archive

- Installing pear/console_table (v1.3.1): Extracting archive

81 package suggestions were added by new dependencies, use `composer suggest` to see details.

Generating autoload files

Applying /presets for absent files in /var/config

Looking for registry snippets from apps

Writing app configs to /var/config dir

Linking app configs to /web Dir

Linking javascript tree to /web/js

Linking themes tree to /web/themes

1 package you are using is looking for funding.

Use the `composer fund` command to find out more!

This composer environment works just like a regular installation. When you install turba, kronolith or passwd through composer, it will end up linking these apps and their library dependencies from the development tree.

Composer provides an option to copy files from the repositories rather than link them. This would allow creating archives with artifacts for distribution packaging. The horde-components tool does not yet provide a switch to generate the necessary change to the repository definitions.
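Until such a switch exists, the change can be applied to the generated composer.json by hand or with a small script. The sketch below sets `"symlink": false` on every path repository, which makes Composer mirror (copy) the files instead of linking them. It assumes `repositories` is written as a list, and the function name and sample manifest are illustrative.

```python
import copy
import json


def mirror_path_repositories(manifest):
    """Return a copy of the manifest in which path repositories are copied, not symlinked."""
    result = copy.deepcopy(manifest)
    for repo in result.get("repositories", []):
        if repo.get("type") == "path":
            repo.setdefault("options", {})["symlink"] = False
    return result


# Hypothetical manifest with one path repository and one vcs repository.
original = {
    "repositories": [
        {"type": "path", "url": "/srv/git/horde/Util", "options": {"symlink": True}},
        {"type": "vcs", "url": "https://github.com/horde/Core"},
    ]
}
mirrored = mirror_path_repositories(original)
print(json.dumps(mirrored, indent=4))
```

Composer also honours the `COMPOSER_MIRROR_PATH_REPOS=1` environment variable, which switches the default strategy for path repositories to mirroring without touching the file.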